Sometimes Thunderbird Mozmill unit tests fail. When they do, it’s frequently a mystery. My logsploder work helped reduce the mystery for my local Mozmill test runs, but did nothing for tinderbox runs or for developers without tool fever. Thanks to recent renewed activity on the Thunderbird front-end, this has become more of a problem, so it was time for another round of amortized tool building towards a platonic ideal.

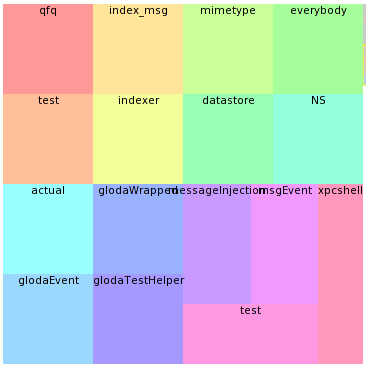

The exciting pipeline that leads to screenshots like the ones in this post:

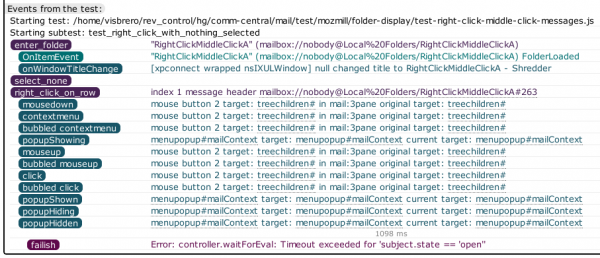

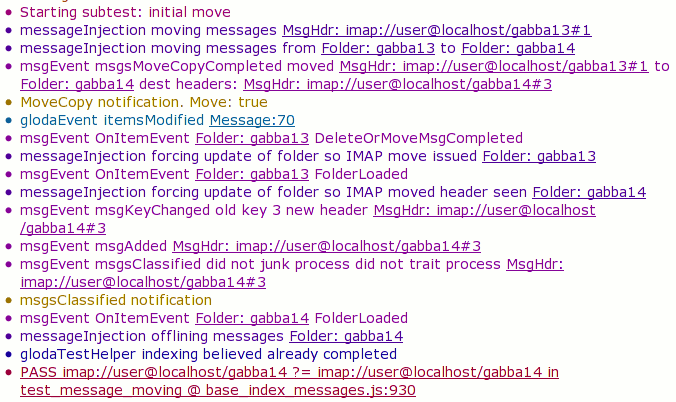

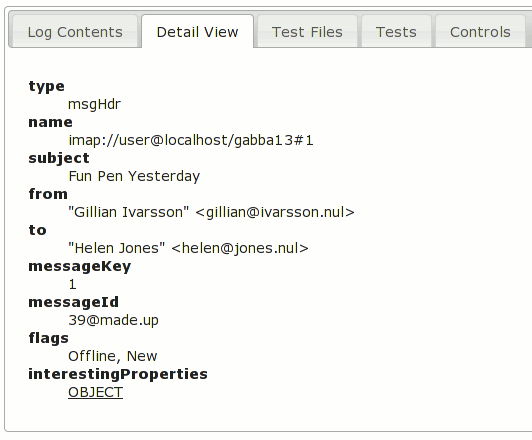

- Thunderbird Mozmill tests run with the testing framework set to log semi-rich object representations to in-memory per-test buckets.

- In the event of a test failure, the in-Thunderbird test framework:

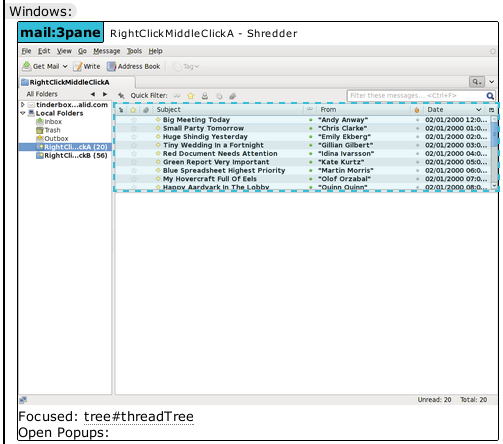

- Gathers information about the state of the system now that we know there is a failure and emit it. This takes the form of canvas-based screenshots (using chrome privileges) of all open windows in the application, plus the currently focused element in the window.

- Emits (up to) the last 10 seconds of log events from the previous test.

- Emits all of the log events from the current test.

- The python test driver receives the emitted data and dumps it to stdout in a series of JSON blobs that are surrounded by magical annotations.

- A node.js daemon that does the database-backed tinderboxpushlog thing processes the tinderbox logs for the delicious JSON blobs. (It is like my previous Jetpack/CouchDB work, which found that CouchDB was not a great thing to expose directly to users and died, but now with node and HBase.)

- It also processes xpcshell runs and computes an MD5 hash intended to fingerprint the specific run. Namely, xpcshell emits lines prefixed with “TEST-” that follow a regular form and describe when the pending test count changes or when specific comparison operations or failures occur. The approach assumes that tests will not perform comparison checks on effectively random values, and that comparisons will tend to be deterministic (even if there are random orderings in the test) or notable when they are not. If those assumptions hold, the trace derived from this filtering and hashing will be consistent enough that similar failures should produce identical hashes.

- Nothing is done with mochitests because Thunderbird does not have them, they don’t appear to emit any context about their failures, and, as far as I can tell, a mochitest failure is frequently the fault of an earlier test that claimed it had completed but still had some kind of ripples happening that broke a later test.
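The xpcshell fingerprinting step could look roughly like the following Python sketch. It assumes the fingerprint is simply the MD5 of every line beginning with “TEST-”, in order, with surrounding whitespace stripped; the real daemon is written in node.js and may filter or normalize lines differently. The function name `fingerprint` and the sample log lines are made up for illustration.

```python
import hashlib


def fingerprint(log_text: str) -> str:
    """MD5 hex digest over the ordered TEST- lines of an xpcshell log (a sketch)."""
    md5 = hashlib.md5()
    for raw_line in log_text.splitlines():
        line = raw_line.strip()
        # Assumption: only lines that start with "TEST-" describe pending-count
        # changes, comparisons and failures; everything else is treated as noise.
        if line.startswith("TEST-"):
            md5.update(line.encode("utf-8"))
            md5.update(b"\n")  # keep line boundaries distinct in the digest
    return md5.hexdigest()


if __name__ == "__main__":
    # Made-up log excerpts: the noise differs, the TEST- trace does not.
    run_a = "starting\nTEST-PASS | test_x.js | 1 == 1\nTEST-UNEXPECTED-FAIL | test_x.js | 2 == 3\n"
    run_b = "TEST-PASS | test_x.js | 1 == 1\nother noise\nTEST-UNEXPECTED-FAIL | test_x.js | 2 == 3\n"
    print(fingerprint(run_a) == fingerprint(run_b))  # True
```

Because non-“TEST-” output is ignored, two runs that interleave different noise but produce the same sequence of test lines hash identically, which is what lets similar failures be grouped together.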

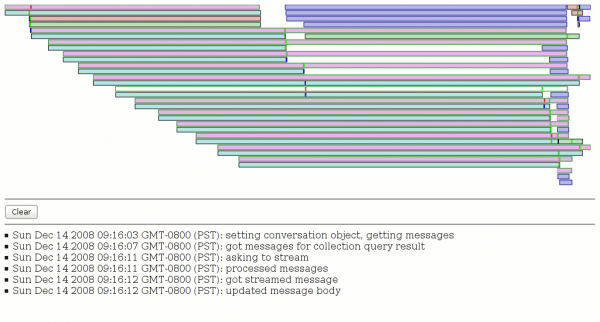

- A wmsy-based UI does the UI thing.
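To make the flow from per-test log buckets to annotated stdout to the log-processing daemon more concrete, here is a minimal Python sketch of the logging and extraction halves (screenshots are left out). It is only illustrative: the real in-Thunderbird framework is JavaScript and the real log processor is the node.js daemon. The marker strings, the report field names, and the names `PerTestLog`, `emit_annotated`, and `extract_blobs` are all hypothetical, and the sketch assumes the “last 10 seconds” window is measured back from the previous test’s final event.

```python
import json
import time
from dataclasses import dataclass, field
from typing import Any, Dict, List, Optional

# Hypothetical annotation markers; the post does not give the real strings.
BEGIN_MARK = "##### RICH-FAILURE-BEGIN #####"
END_MARK = "##### RICH-FAILURE-END #####"


@dataclass
class LogEvent:
    timestamp: float          # seconds since the epoch
    data: Dict[str, Any]      # the "semi-rich" object representation


@dataclass
class PerTestLog:
    """In-memory, per-test log buckets (a sketch, not the real framework)."""
    window_seconds: float = 10.0
    previous: List[LogEvent] = field(default_factory=list)
    current: List[LogEvent] = field(default_factory=list)

    def start_test(self) -> None:
        # The just-finished test's bucket becomes "previous"; older ones are dropped.
        self.previous, self.current = self.current, []

    def log(self, data: Dict[str, Any], timestamp: Optional[float] = None) -> None:
        ts = time.time() if timestamp is None else timestamp
        self.current.append(LogEvent(ts, data))

    def failure_report(self, failure: Dict[str, Any]) -> Dict[str, Any]:
        # Assumption: the window is measured back from the previous test's last event.
        tail: List[LogEvent] = []
        if self.previous:
            cutoff = self.previous[-1].timestamp - self.window_seconds
            tail = [e for e in self.previous if e.timestamp >= cutoff]
        return {
            "failure": failure,
            "previousTestEvents": [e.data for e in tail],
            "currentTestEvents": [e.data for e in self.current],
        }


def emit_annotated(report: Dict[str, Any]) -> str:
    """Wrap one JSON blob in marker lines, as a test driver might print it to stdout."""
    return "\n".join([BEGIN_MARK, json.dumps(report), END_MARK])


def extract_blobs(log_text: str) -> List[Dict[str, Any]]:
    """Pull every annotated JSON blob back out of an otherwise unstructured log."""
    blobs: List[Dict[str, Any]] = []
    inside = False
    buf: List[str] = []
    for line in log_text.splitlines():
        if line.strip() == BEGIN_MARK:
            inside, buf = True, []
        elif inside and line.strip() == END_MARK:
            blobs.append(json.loads("\n".join(buf)))
            inside = False
        elif inside:
            buf.append(line)
    return blobs


if __name__ == "__main__":
    log = PerTestLog()
    log.start_test()
    log.log({"msg": "old"}, timestamp=100.0)
    log.log({"msg": "recent"}, timestamp=115.0)
    log.start_test()
    log.log({"msg": "now"}, timestamp=120.0)
    stdout = "noise\n" + emit_annotated(log.failure_report({"exception": "x"})) + "\nmore noise\n"
    print(extract_blobs(stdout)[0]["previousTestEvents"])  # [{'msg': 'recent'}]
```

Keeping only two buckets bounds memory to roughly two tests’ worth of events, and the marker lines let the log processor pick the JSON out of tinderbox output without having to understand anything else in the log.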

The particular failure shown here is an interesting one: the exception tells us that a popup we expected to open never opened. But the events in the log show that the popup actually opened and then immediately closed itself. Given that this happened (locally) on Linux, I suspected that the window manager had not let the popup acquire focus and perform a capture. It turns out that I had forgotten to install the xfwm4 window manager on my new machine; my xvnc session’s xstartup script was trying to run it in order to get a window manager that plays nicely with Mozmill and our focus needs. (Many window managers have configurable focus protection that converts a window’s attempt to grab focus into an attention-requested notification.)

This is a teaser because it will probably be a few more days before the required patch lands on comm-central, I use RequireJS’ fancy new optimizer to make the client load process more efficient, and I am happy that the server daemons can keep going for long stretches of time without messing up. The server and client code is available on github, and the comm-central patch is available from hg.