About the name. David Ascher picked it. My choice was flamboydoc, in recognition of my love of angry fruit salad color themes and because every remotely sane name has already been used by the 10 million other documentation tools out there. Regrettably, not only have we lost the excellent name, but the color scheme is at best mildly irritated at this point.

So why yet another JavaScript documentation tool?

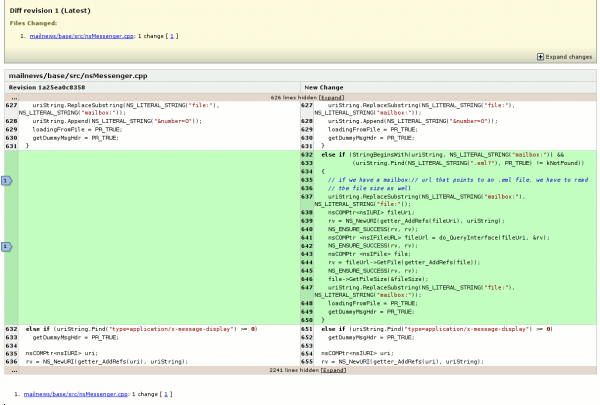

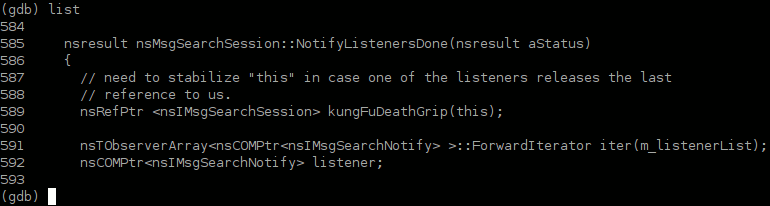

- JavaScript 1.8 support. JSHydra (thanks jcranmer!) is built on SpiderMonkey. The existing JS documentation tools out there can be briefly lumped into “doesn’t even bother attempting to parse JavaScript” and “parses it to some degree, but gets really confused by JavaScript 1.8 syntax”. By having the parser be the parser of our JS engine, anything the engine accepts is guaranteed to parse. And non-parsing tools tend to require too much hand labeling to be practical.

- Doccelerator is not intended to be just a documentation tool. While JSHydra is still in its infancy, it promises the ability to extract information from function bodies. Its namesake, Dehydra, is a static analysis tool for C++ and has already given us great things (dxr, also in its infancy).

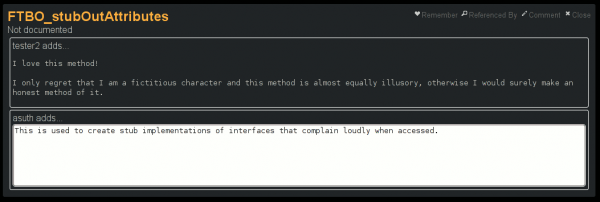

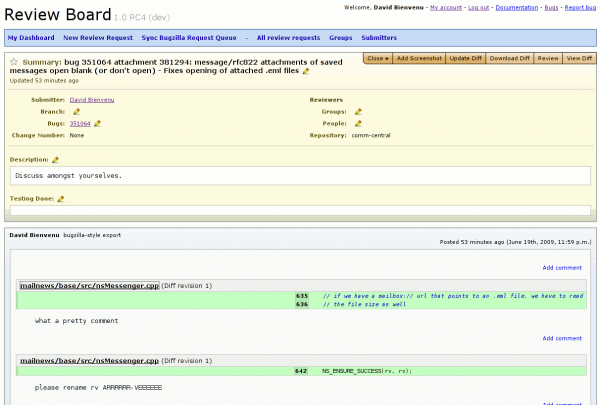

- Support community API docs contributions without forking the API docs or requiring source patches. DevMo is a great place for documentation, but it is an iffy place for doxygen-style API docs. Short of an exceedingly elaborate tool that round-trips doxygen/JSDoc comments to the wiki and user modifications back again, the documentation is bound to diverge. By supporting comments directly on the semantic objects themselves[1], we eliminate having to try and determine what a given wiki change corresponds to. (This would be annoying even if you could force the wiki users to obey a strict formatting pattern.) This enables automatic patch generation, etc.

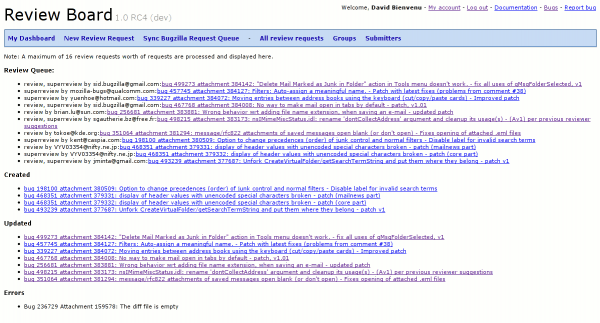

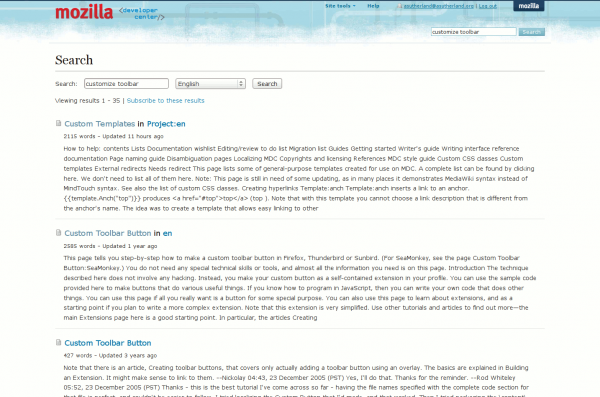

- Mashable. You post the JavaScript source file to a server running the Doccelerator parser. You get back a set of JSON documents. You post those into a CouchDB couch. The UI is a CouchApp; you can modify it. Don’t like the UI and just want a service? You can query the couch for things and get back JSON documents. Want custom (CouchDB) views but are not in control of a documentation couch? Replicate the couch to your own local couch and add some views.

- Able to leverage data from dehydra/dxr. Mozilla JS code lives in a world of XPCOM objects and their XPIDL-defined interfaces. We want the JS documentation to be able to interact with that world. Obviously, this raises some issues of where the boundary lies between dxr and Doccelerator. I don’t think it matters at this point; we just need internal and API documentation for Thunderbird 3 now-ish.

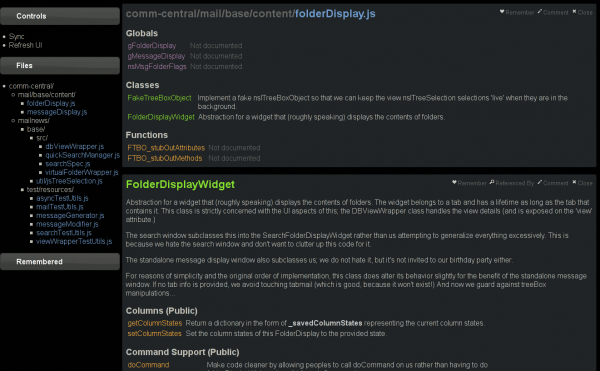

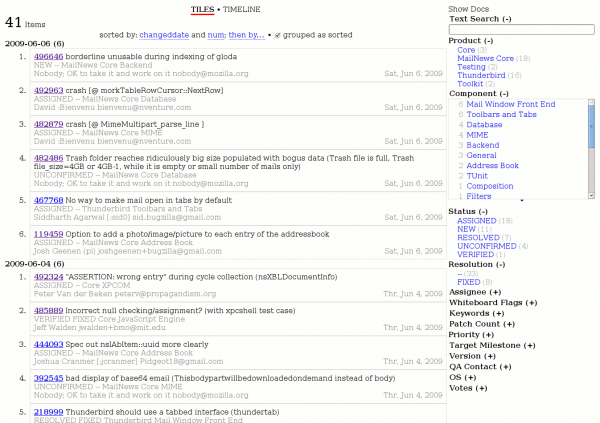

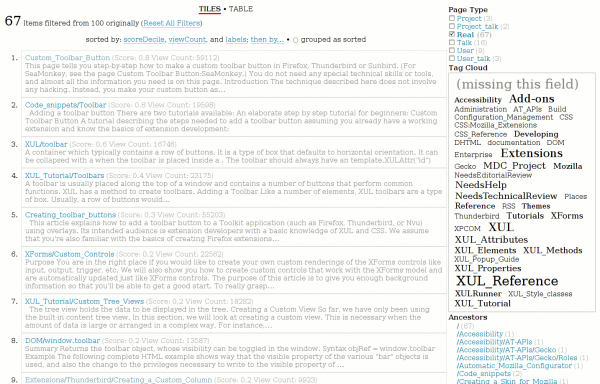

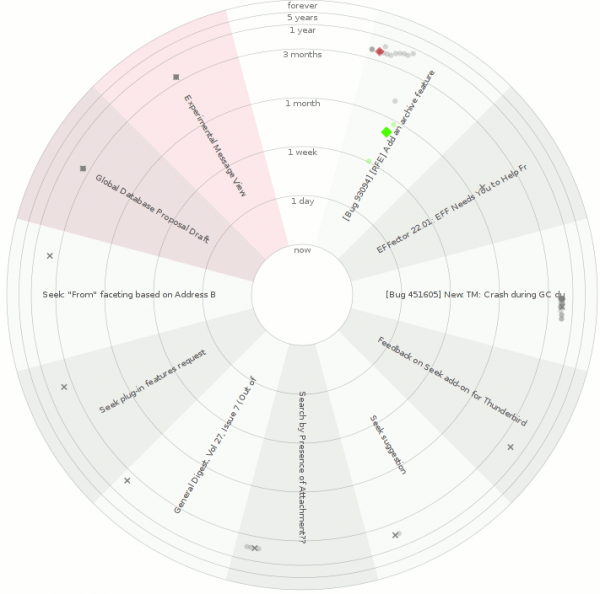

- A more ‘dynamic’ UI. The UI is inspired by TiddlyWiki’s interface where all wiki “pages” open in the same document. I often find myself only caring about a few methods of a class at any given time. Documentation is generally either organized in monolithic pages or single pages per function. Either way, I tend to end up with a separate tab for each thing of interest. This usually ends in both confusion and way too many tabs.

1: Right now I only support commenting at the documentation display granularity, which means you cannot comment on arguments individually, just on the function/method/class/etc. as a whole.
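To make the “Mashable” bullet above a bit more concrete, here is a rough sketch of that flow in JavaScript. Treat it as an illustration under assumptions, not as Doccelerator's actual interface: the parser URL, the database name, and the design document and view names are all made up. Only the CouchDB endpoints (`_bulk_docs`, `_design/<ddoc>/_view/<view>`, and `_replicate`) are part of CouchDB's standard HTTP API. The functions just build request descriptions, so nothing here touches the network.

```javascript
// ASSUMPTIONS: none of these values come from the post; they are placeholders.
const PARSER_URL = "http://localhost:8080/parse"; // hypothetical parser endpoint
const COUCH_URL = "http://localhost:5984";        // CouchDB's default port
const DB_NAME = "docs";                           // hypothetical database name

// Step 1: post the raw JavaScript source to the parser service.
// The content type is a guess; the real service may expect something else.
function buildParseRequest(source) {
  return {
    url: PARSER_URL,
    method: "POST",
    headers: { "Content-Type": "application/javascript" },
    body: source,
  };
}

// Step 2: load the JSON documents that came back into the couch in one
// request, using CouchDB's _bulk_docs endpoint.
function buildBulkDocsRequest(docs) {
  return {
    url: `${COUCH_URL}/${DB_NAME}/_bulk_docs`,
    method: "POST",
    headers: { "Content-Type": "application/json" },
    body: JSON.stringify({ docs }),
  };
}

// Step 3: skip the UI and query a view directly. CouchDB expects the key
// to be JSON-encoded and then URL-encoded.
function buildViewQuery(designDoc, viewName, key) {
  const query =
    key === undefined ? "" : `?key=${encodeURIComponent(JSON.stringify(key))}`;
  return {
    url: `${COUCH_URL}/${DB_NAME}/_design/${designDoc}/_view/${viewName}${query}`,
    method: "GET",
  };
}

// Step 4: replicate someone else's documentation couch into a local one,
// where you are free to add your own views.
function buildReplicateRequest(sourceDb, targetDb) {
  return {
    url: `${COUCH_URL}/_replicate`,
    method: "POST",
    headers: { "Content-Type": "application/json" },
    body: JSON.stringify({ source: sourceDb, target: targetDb, create_target: true }),
  };
}
```

Each description can be handed to whatever HTTP client you like, for example `fetch(req.url, req)` in an environment that provides `fetch`. Keeping the request building separate from the sending makes it easy to see what goes over the wire at each step.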

Example links which will eventually die because I’m not guaranteeing this couch instance will stay up forever:

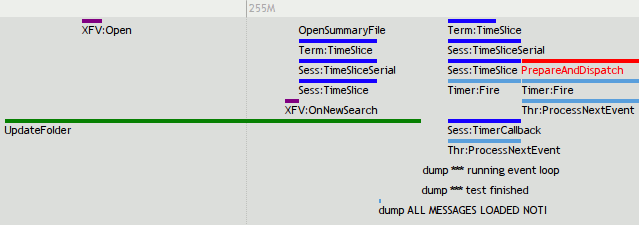

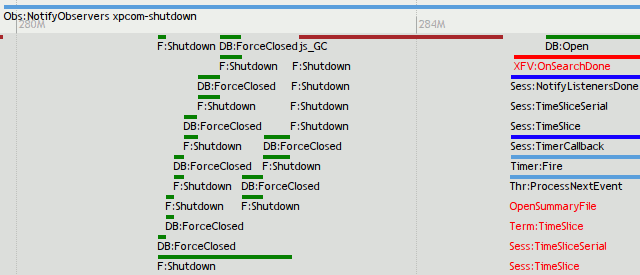

- Live version of the first screenshot, showing folderDisplay.js and FolderDisplayWidget.

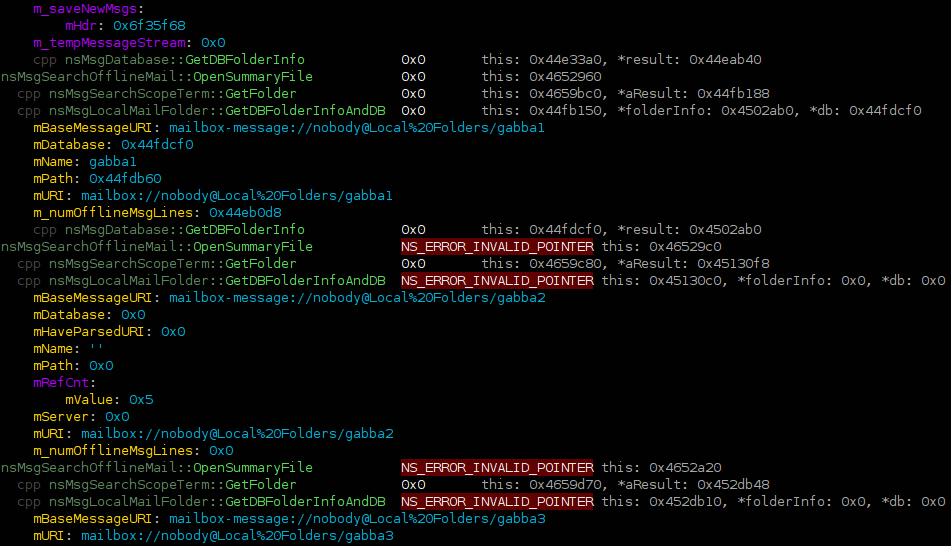

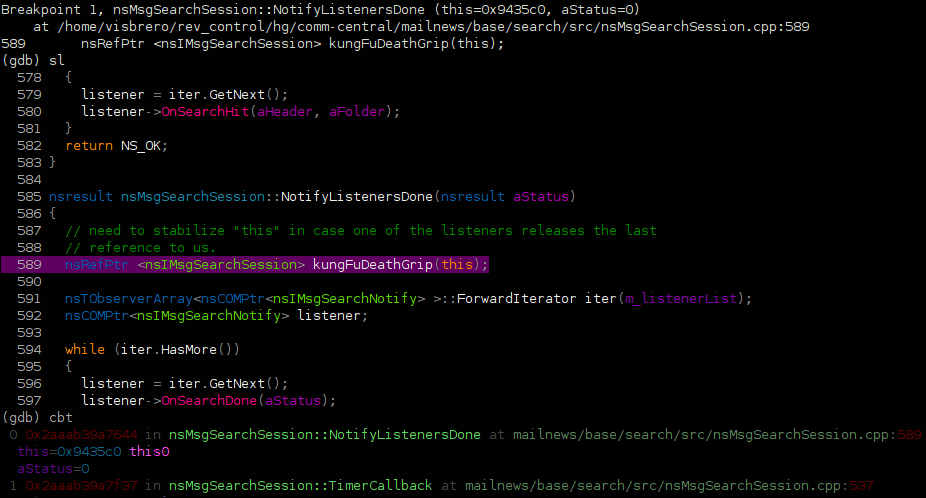

- Live version of the second screenshot, showing comments on FTBO_stubOutAttributes. Feel free to add your own.

- Live version with nothing up. Click on some files, explore!

The hg repo is here. I tried to code the JS against the 1.5 standard and generally be cross-browser compatible, but I know at least Konqueror seems to get upset when it comes time to post (modified) comments. I’m not sure what’s up with that.

Exciting potential taglines:

- Doccelerator: Documentation from the future, because the documentation was doccelerated past the speed of light, and we all know how that turns out.

- Doccelerator: It sounds like an extra pedal for your car and it’s just as easy to use… unless we’re talking about the clutch.

- Doccelerator: Thankfully the name doesn’t demand confusingly named classes in the service of a stretched metaphor. That’s good, right?