Mailnews’ libmime is one of the harder modules to wrap one’s head around. Its 8-letter filenames where the first four letters tend to be “mime”, its home-grown glib-style OO rather than actual C++, and its intermingling of display logic with parsing logic do not make for a fun development or even comprehension experience.

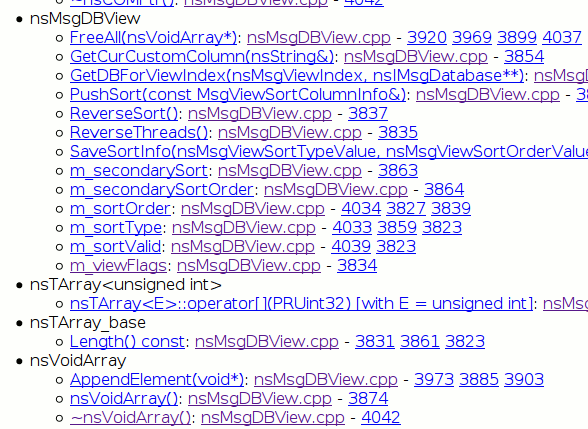

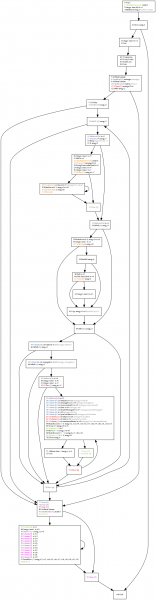

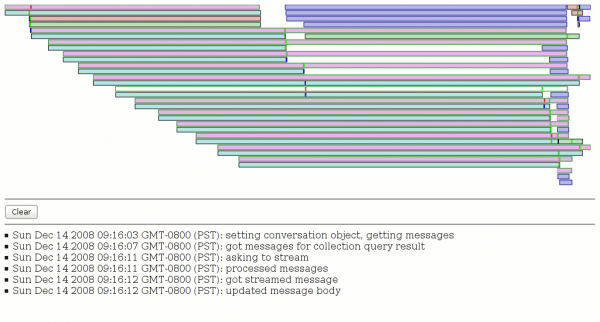

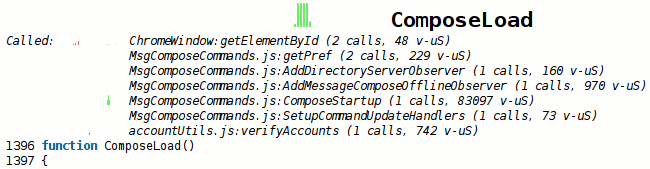

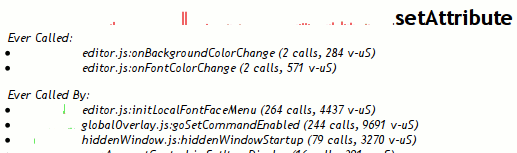

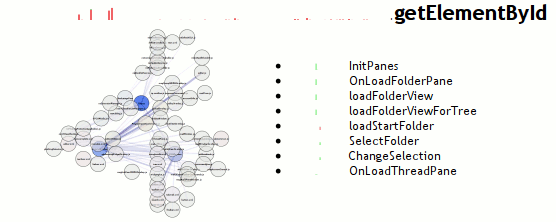

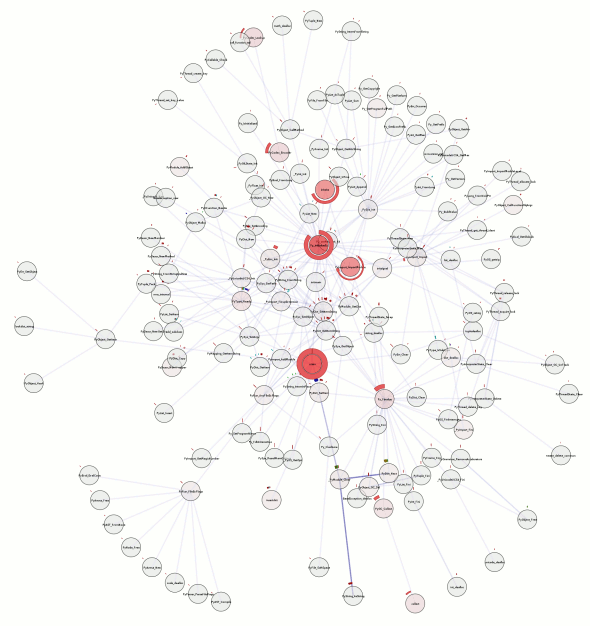

Running an xpcshell unit test under roc’s chronicle-recorder and then processing the result with my chroniquery stuff (repo info), we get a colorful HTML trace of the execution, somewhat broken out (note: pretend the stream_write and stream_complete calls are interleaved; they are paired). Specific examples of libmime processing for the inquisitive (there are more if you check the former link though):

- simple text/plain: stream_write, stream_complete

- simple text/html: stream_write, stream_complete

- multipart/alternative with text/plain and text/html: stream_write, stream_complete

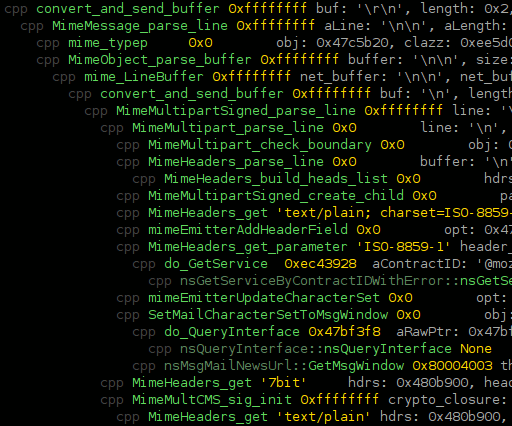

- (fake, not a real signature) multipart/signed SMIME around the previous multipart/alternative: stream_write, stream_complete. Because the SMIME implementation buffers up a lot of stuff, more work happens in the stream_complete (including most output).

- something the JS emitter chokes on!: multipart/related with a text/html and a content-id’d image/png: stream_write, stream_complete.
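For anyone who has not stared at one recently, the troublesome multipart/related case has roughly the shape below. This is a minimal sketch built with Python's standard email package, not the message from the unit test; the HTML, the Content-ID value and the image bytes are all made-up placeholders.

```python
from email.mime.image import MIMEImage
from email.mime.multipart import MIMEMultipart
from email.mime.text import MIMEText

# Container part: multipart/related groups a root document with its resources.
related = MIMEMultipart("related")

# Root document: HTML that points at the image through a cid: URL.
# (Placeholder markup, not the unit test's content.)
html = MIMEText('<html><body><img src="cid:part1.example"></body></html>', "html")
related.attach(html)

# Related resource: placeholder bytes standing in for a PNG. The subtype is
# given explicitly so nothing tries to sniff the (fake) image data.
image = MIMEImage(b"\x89PNG\r\n\x1a\n placeholder, not a real image", _subtype="png")
image.add_header("Content-ID", "<part1.example>")
related.attach(image)

part_types = [part.get_content_type() for part in related.get_payload()]
print(related.get_content_type(), part_types)
```

The point of the structure is that the HTML part cannot be rendered correctly until the part carrying the matching Content-ID has been seen.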

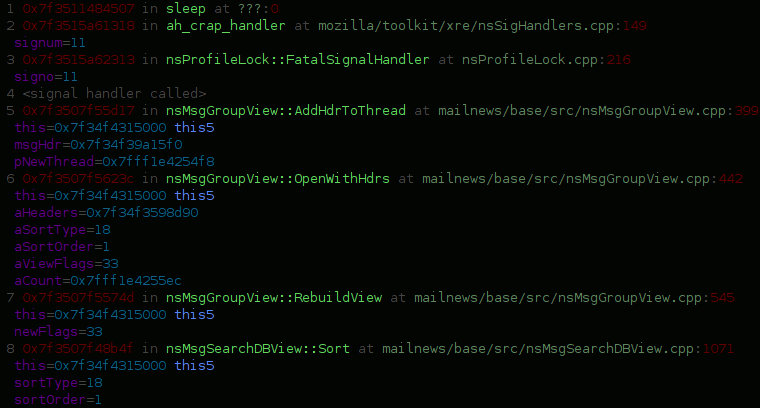

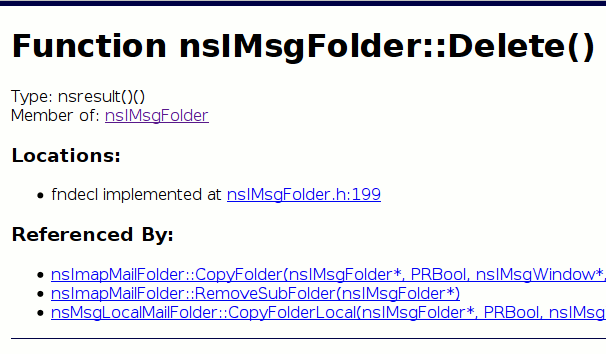

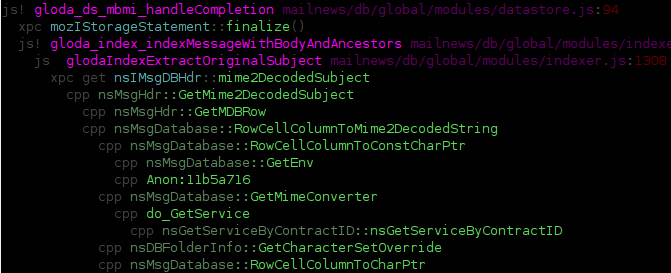

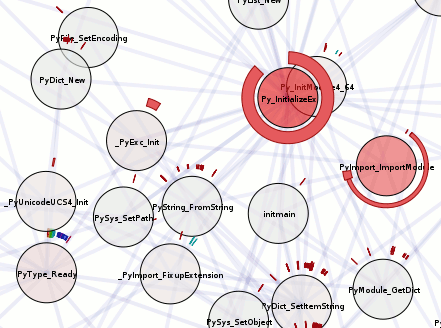

The thing that kickstarted this exciting foray is the error pictured in the screenshot above from an earlier run. The return values are in yellow, and you can see where the error propagates from (the -1 cast to unsigned). If you look at the HTML file, you will also note that the file stops after the error because the functions bail out as soon as they hit an error.
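If the "-1 cast to unsigned" and the abrupt end of the trace are not obvious, here is a toy illustration. It assumes the return value is displayed as a 32-bit unsigned integer, and the function names are hypothetical, not libmime's.

```python
import ctypes

calls = []  # records which (hypothetical) parse steps actually ran


def parse_line(line):
    """Pretend parse step: returns 0 on success, -1 on failure."""
    calls.append("parse_line:" + line)
    return -1 if line == "bad" else 0


def parse_all(lines):
    """Caller that bails out as soon as a step reports an error."""
    for line in lines:
        status = parse_line(line)
        if status < 0:
            return status  # propagate the error; later lines never run
    return 0


status = parse_all(["ok", "bad", "never reached"])

# What -1 looks like once it is reinterpreted as a 32-bit unsigned value.
shown = ctypes.c_uint32(status).value
print(status, shown, hex(shown))
```

Every caller up the stack hands back the same value, so the trace shows the same large number repeated and then nothing after it.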

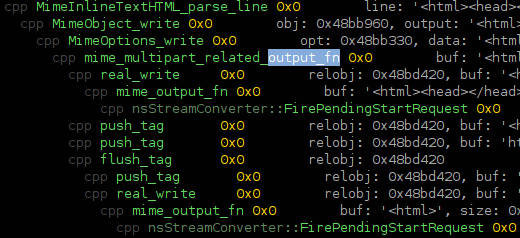

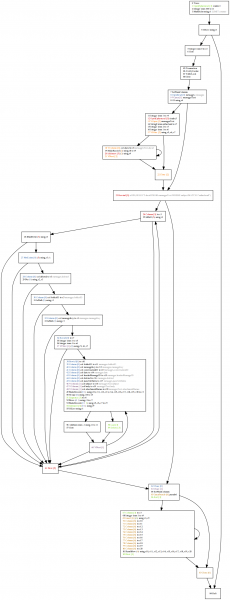

However, our actual problem and driving reason for the unit tests is the JS emitter choking on multipart/related in writeBody (which it receives by way of ‘output_fn’). Why? Why JS Emitter?! (You can open the links and control-F along at home!)

- We look at the stream_write trace for our multipart/related. That’s odd, no ‘output_fn’ in there.

- We look at the stream_complete trace for the multipart/related. There’s our ‘output_fn’! And it’s got some weird HTML processing friends happening. That must be to transform links to the related content into something our docshell can render. This also explains why this processing is happening in stream_complete rather than stream_write… it must have buffered up the content so it could make sure it had seen all the ‘related’ documents and their Content-IDs so it could know how to transform the links.

- Uh oh… that deferred processing might be doing something bad, since our consumer receives a stream of events. We had to do something special for SMIME for just such a reason…

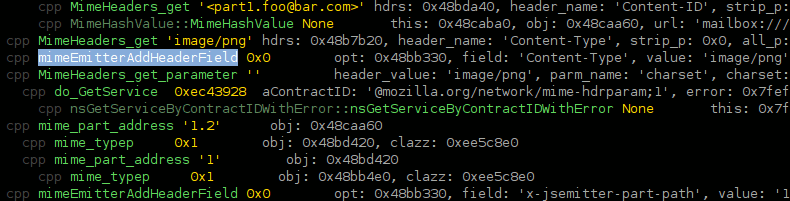

- We check stream_complete for ‘mimeEmitterAddHeaderField’ calls, which the JS emitter keys off of to know what part is currently being processed and what type of body (if any) it should expect to receive. Uh-oh, none in here.

- We check stream_write for ‘mimeEmitterAddHeaderField’ calls, specifically with a “Content-Type” field. And we find them. The bad news is that they are apparently emitted as the initial streaming happens. So we see the content-type for our “text/html”, then our “image/png”. So when stream_complete happens, the last thing our JS emitter will have seen is “image/png” and it will not be expecting a body write. (It will think that the text/html had no content whatsoever.)

- Khaaaaaaaaaaaaaaaaaaaaaaaaan!
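The ordering problem above can be replayed in a few lines. This is a toy model only: `ToyEmitter` is a hypothetical stand-in that tracks the current part the way the post describes, not the actual JS emitter code, and "dropping" the body is just one assumed way of choking.

```python
class ToyEmitter:
    """Hypothetical emitter that keys off Content-Type headers."""

    def __init__(self):
        self.current_type = None  # type of the part we think we are in
        self.bodies = {}          # content type -> list of body chunks
        self.dropped = []         # body writes we did not expect

    def add_header_field(self, name, value):
        # A Content-Type header marks the start of a new part.
        if name.lower() == "content-type":
            self.current_type = value
            self.bodies.setdefault(value, [])

    def write_body(self, data):
        # Only text parts are expected to deliver a body in this model.
        if self.current_type is None or not self.current_type.startswith("text/"):
            self.dropped.append((self.current_type, data))
            return
        self.bodies[self.current_type].append(data)


emitter = ToyEmitter()

# stream_write phase: headers arrive as the parts stream in.
emitter.add_header_field("Content-Type", "text/html")
emitter.add_header_field("Content-Type", "image/png")

# stream_complete phase: the buffered, link-rewritten HTML is finally output.
emitter.write_body("<html>rewritten body</html>")

print(emitter.bodies["text/html"], emitter.dropped)
```

In the model, the text/html part ends up with no content and the HTML body arrives while the emitter believes it is inside image/png, which is the same mismatch the traces show.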

In summary, unit tests and execution tracing working together with pretty colors have helped us track down an annoying problem without going insane. (libmime is a lot to hold in your head if you don’t hack on it every day. Also, straight debugger breakpoint fun generally requires you to try and formulate and hold a complex mental model… and that’s assuming you don’t go insane from manually stepping aboot and/or are lucky with your choices of where you put your breakpoints.) The more important thing is that next time I want to refresh my understanding of what libmime is up to, I have traces already available. (And the mechanics to generate new ones easily. But not particularly quickly. chronicle and my trace-generating mechanism be mad slow, yo. It may be much faster in the future to use the hopefully-arriving-soon archer-gdb python-driven inferior support, even if it can’t be as clever about function-call detection without cramming int 0x3’s all over the place.)

Useful files for anyone trying to duplicate my efforts: my ~/.chroniquery.cfg for this run, the unit test as it existed, and the command-line args were: trace -f mime_display_stream_write -f mime_display_stream_complete -c -H /tmp/trace3/trace.html --file-per-func-invoc