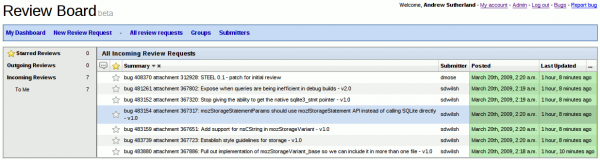

Review board is a delightful django-based review tool. Whereas bugzilla’s diff display is limited to taking the provided diff and coloring hunks without additional context, review board actually retrieves the underlying files from mercurial, applies the patch, colorizes the source files (using pygments), and thus can give you additional context. Also, it allows you to click on line(s) and attach a note at that location.

Anywho, I made a new tool that ‘synchronizes’ bugzilla request queues with review board. It finds the things in your request queue, and then creates a review in review board, transferring the patch. There is some limited hard-coded biasing towards comm-central, but it’s arguably easily adaptable. It uses a slightly hacked up version of the post-review script found in review board’s contrib directory to serve as the API to review board.

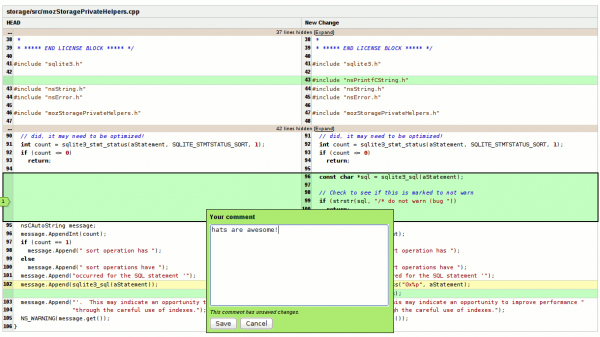

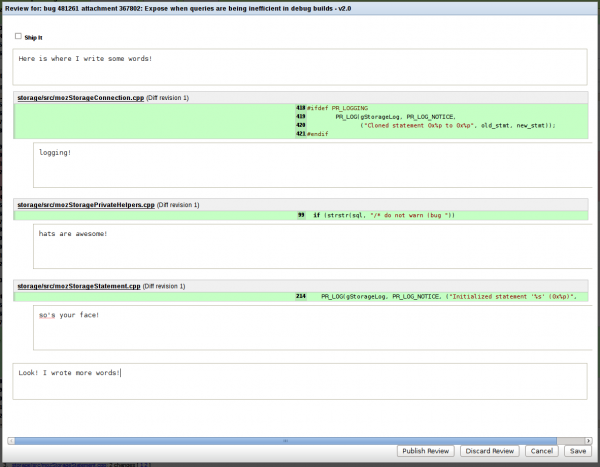

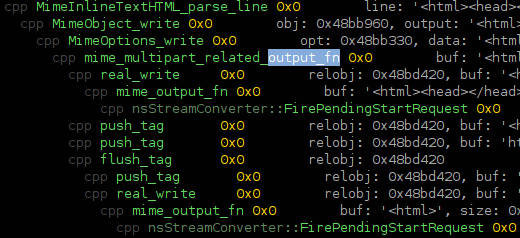

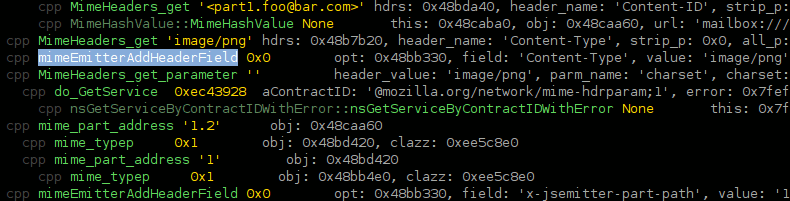

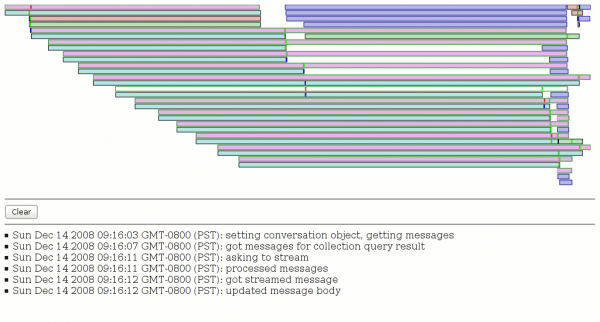

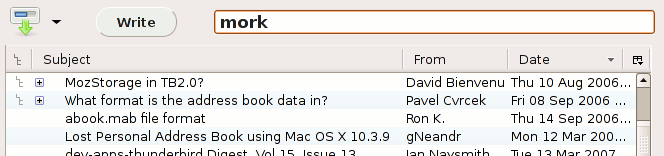

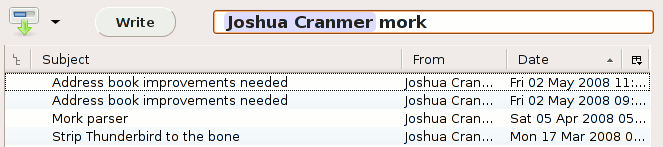

The intent is to make it easier for people, or at least me, to do reviews. I am definitely NOT proposing that the review “of record” live outside bugzilla. But I think it might make it easier to prepare reviews in the first place. For example, see the picture below? It’s the result of my clicking on lines of code and making notes. And those hyperlink-looking things that look like they might take you to the actual context, rather than manually having to try and determine context from people’s comments in the bugzilla notes? They are actual hyperlinks that do what I just said!

If you are a mailnews hacker and would like to see your review queue in my reviewboard instance, ping me on IRC. I may have already processed your queue and made you an account, or maybe not. The review board API does not yet automate user creation and I got tired of doing things manually and gave up.

Warnings:

- Things go awry on old-school patches. For example, a patch that thinks it is against (the CVS layout of) “mozilla/mailnews” appears to not work. The right answer is a patch against “a/mailnews” (I’m not sure if the git-style is essential). Things worked fine for my queue, but some people with old stuff in their queues experienced glitches. The script now inserts “problem!” into the summary when this happens, but in my reviewboard instance there may be some reviews that pre-date this logic. (There should be a note in the description though. Also, there will obviously be no diff attached.)

- I initially forgot about the whole review=one person, super-review=another person, and maybe review=yet another person. Although this is now fixed and reviews target all requested revieweres, there may be some reviews missing from your queue because they were created for one of the dudes who was not you and my manual nuking didn’t get to those.

- Uh, it creates the reviews, but it doesn’t destroy them.

- Also uh, there will be scaling issues if the reviews don’t eventually get closed out.